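Note: This post is a translation of the English blog post "How TiDB Implements Point-in-Time Recovery", originally published on April 18, 2023.

For a database product, point-in-time recovery (PITR) is a critical feature. With PITR, users can restore a database to a specific point in time, which protects against accidental damage and user error. For example, if data is accidentally deleted or corrupted, PITR can restore the database to its state before that moment, avoiding the loss of important data.

This post explores how PITR works in TiDB, an advanced open-source distributed SQL database. It also describes how we optimized PITR to maximize its stability and performance.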

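TiDB's PITR architecture overview

TiDB has three components:

- TiDB, the SQL query layer

- TiKV, the distributed storage engine

- PD (Placement Driver), which manages metadata and acts as the brain of TiDB

On every data change, TiDB generates distributed logs that contain the transaction ID, the timestamp, and the details of each change. When PITR is enabled, TiDB periodically saves these logs to external storage such as AWS S3, Azure Blob, or a network file system (NFS). If data is lost or corrupted, users can use the Backup and Restore (BR) tool to restore the most recent database backup and then apply the data changes that were saved up to the point at which the problem occurred.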

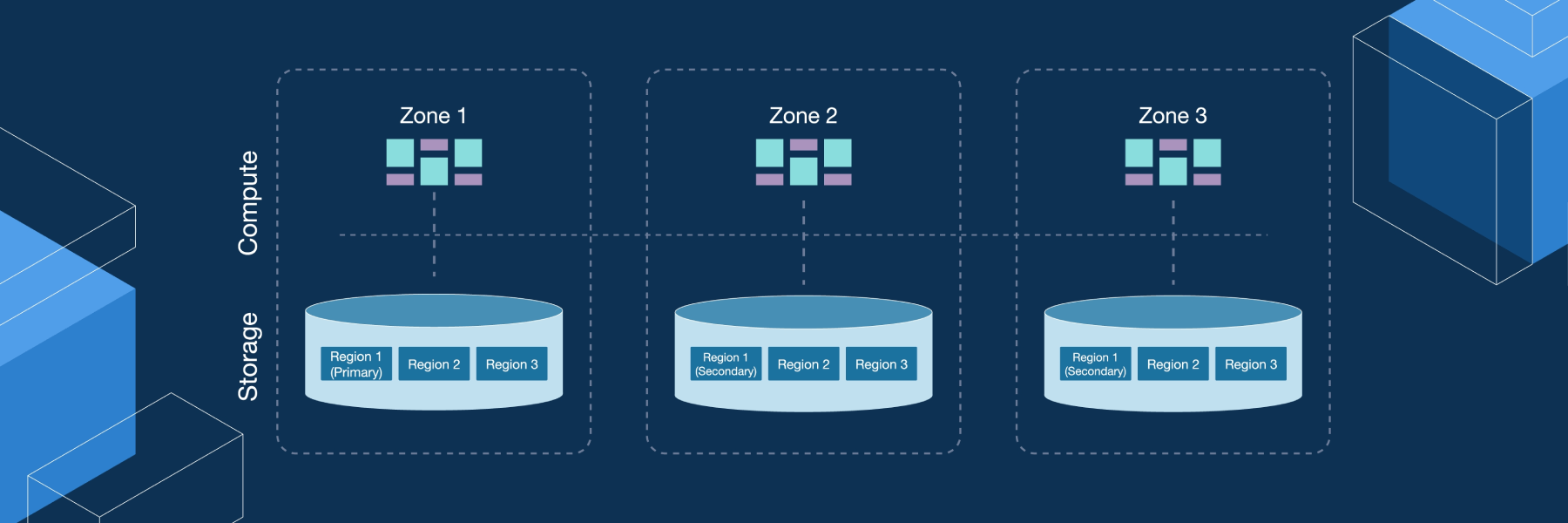

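Figure 1. TiDB's PITR architecture

When a user starts a log backup, the BR tool registers a backup task with PD. At the same time, a TiDB node is selected as the log backup coordinator, which interacts with PD periodically to calculate the global backup checkpoint timestamp. Meanwhile, each distributed storage (TiKV) node periodically reports the status of the backup task to PD and sends data change logs to the specified external storage.

When a user enters a PITR recovery command, the BR tool reads the backup metadata and notifies all TiKV nodes to start the recovery process. The restore worker on each TiKV node then reads the change logs from before the specified point in time and applies them to the cluster. The result is a TiDB cluster as it was at that point in time.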

The log backup and restore cycle

Let's take a closer look at how log backup and restore work in TiDB's PITR.

Log backup

The main interaction flow of the log backup process is as follows.

Figure 2. Log backup in TiDB.

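- BR receives the backup command `br log start`. It parses the start time and storage location of the backup task, then registers the log backup task with PD.

- The log backup observer on each TiKV node watches PD for log backup tasks that are created or updated, and backs up the changed data logs on that node that fall within the backup time range.

- TiKV nodes back up the KV change logs and send their local backup progress to TiDB. The observer service on each TiKV node keeps backing up KV change logs and generates backup metadata using the global-checkpoint-ts obtained from PD. Meanwhile, it periodically uploads the log backup data and metadata to storage.

- The TiDB node calculates and persists the global backup progress. The TiDB coordinator node polls all TiKV nodes for the backup progress of each Region, calculates the overall log backup progress from the progress of each node, and reports it to PD.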
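The coordinator step above can be sketched in a few lines. This is only an illustration, not TiDB's actual code: the `RegionProgress` type and its field names are hypothetical, and the sketch assumes that the overall progress is the minimum of the per-Region checkpoints, since a change is only safely recoverable once every Region has backed up past its timestamp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionProgress:
    """Backup progress reported for one Region (hypothetical shape)."""
    store_id: int       # TiKV node that reported the progress
    region_id: int      # Region the progress belongs to
    checkpoint_ts: int  # all changes with ts <= checkpoint_ts are backed up


def global_checkpoint_ts(progress: list[RegionProgress]) -> int:
    """Return the timestamp up to which every Region has been backed up.

    Assumption: the global checkpoint is the minimum over all Regions,
    because the slowest Region bounds what can be restored consistently.
    """
    if not progress:
        raise ValueError("no Region progress reported yet")
    return min(p.checkpoint_ts for p in progress)


# Progress polled from two TiKV nodes covering three Regions.
polled = [
    RegionProgress(store_id=1, region_id=10, checkpoint_ts=105),
    RegionProgress(store_id=1, region_id=11, checkpoint_ts=98),
    RegionProgress(store_id=2, region_id=12, checkpoint_ts=120),
]
checkpoint = global_checkpoint_ts(polled)
```

With this input, the slowest Region (checkpoint 98) determines the value that the coordinator would report to PD.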

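Log restore

The log restore process is shown in the figure below.

Figure 3. Log restore in TiDB

- When a user runs the `br restore <timespot>` command, the BR tool validates the backup address, the restore time, and the database objects.

- BR restores the full data and reads the existing log backup data. After calculating which log backup data is needed, BR accesses PD to get Region and KV range information, creates restore-log requests, and sends them to the corresponding TiKV nodes.

- Each TiKV node starts a restore worker, downloads the corresponding backup data from the storage media, and applies the necessary changes to the corresponding Regions.

- When the restore completes, TiKV returns the result to BR.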
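The core idea of the restore flow above, restoring a full backup and then replaying change logs up to the requested time, can be shown with a toy in-memory model. This is a minimal sketch under stated assumptions: `ChangeLog` and `restore_to` are hypothetical names, a plain dictionary stands in for the cluster, and a `None` value is assumed to mean a delete. The real process is distributed across Regions and TiKV nodes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeLog:
    """One backed-up KV change (hypothetical shape)."""
    commit_ts: int
    key: str
    value: Optional[str]  # None is assumed to represent a delete


def restore_to(snapshot: dict[str, str],
               logs: list[ChangeLog],
               restore_ts: int) -> dict[str, str]:
    """Rebuild the state at restore_ts from a full backup plus change logs."""
    state = dict(snapshot)  # start from the full backup, leave the input intact
    for entry in sorted(logs, key=lambda e: e.commit_ts):
        if entry.commit_ts > restore_ts:
            break  # changes after the target time are not applied
        if entry.value is None:
            state.pop(entry.key, None)
        else:
            state[entry.key] = entry.value
    return state


full_backup = {"a": "1"}
change_logs = [
    ChangeLog(commit_ts=10, key="b", value="2"),
    ChangeLog(commit_ts=20, key="a", value=None),  # accidental delete
    ChangeLog(commit_ts=30, key="b", value="3"),
]
before_delete = restore_to(full_backup, change_logs, restore_ts=15)
```

Choosing `restore_ts=15` recovers the state just before the accidental delete at timestamp 20, which is the scenario PITR is meant to handle.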

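How we optimized PITR for TiDB

Log backup and restore are very complex. Since PITR was first released in TiDB, we have continuously optimized it to improve stability and performance. For example, in the first version, log backup generated a large number of small files, which caused many problems for users. In the latest version, backup files are aggregated into multiple files of at least 128 MB each, which solves this problem.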
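To make the small-file problem concrete, here is one simple way such aggregation could work. The post does not describe TiDB's actual strategy, so this greedy grouping is purely an assumption for illustration, and `aggregate` and `MIN_FILE_BYTES` are hypothetical names.

```python
MIN_FILE_BYTES = 128 * 1024 * 1024  # 128 MB threshold mentioned in the text


def aggregate(sizes: list[int], min_bytes: int = MIN_FILE_BYTES) -> list[list[int]]:
    """Greedily group small files so that each output file reaches min_bytes.

    Each inner list holds the sizes of the small files merged into one
    output file. A trailing remainder below min_bytes is folded into the
    previous output file, so only an input smaller than min_bytes in total
    can yield an undersized file.
    """
    batches: list[list[int]] = []
    current: list[int] = []
    current_bytes = 0
    for size in sizes:
        current.append(size)
        current_bytes += size
        if current_bytes >= min_bytes:
            batches.append(current)
            current, current_bytes = [], 0
    if current:
        if batches:
            batches[-1].extend(current)
        else:
            batches.append(current)
    return batches


# Sizes are in MB here for readability, so the threshold is passed as 128.
merged = aggregate([50, 50, 50, 100, 30, 10], min_bytes=128)
```

In this example six small files become two output files, which reduces the number of objects that external storage and the restore process have to handle.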

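In large TiDB clusters, a full backup often takes a long time. A backup that is interrupted and cannot be resumed is very frustrating for users. TiDB 6.5 supports resumable backups with optimized performance. Currently, PITR can back up a single TiKV cluster at 100 MB/s, and the performance degradation that log backup causes on the source cluster is limited to within 5%. These optimizations significantly improve the user experience and the success rate of large-cluster backups.

Because backup and recovery are usually the last line of defense for data security, PITR's recovery point objective (RPO) and recovery time objective (RTO) are also a major concern for many users. We made many optimizations to improve the stability of PITR, including the following.

- Optimized the communication mechanism among BR, PD, and TiKV. As a result, PITR can maintain an RPO of less than five minutes in most failure scenarios during rolling restarts of TiDB and TiKV.

- Optimized recovery performance, reaching 30 GB/h in the log-apply stage and significantly reducing RTO.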
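As a rough back-of-the-envelope aid, the log-apply figure above can be turned into a time estimate. This is a simple sketch: `log_apply_hours` is a hypothetical helper, it covers only the log-apply stage (not the full-backup restore that precedes it), and real throughput depends on the cluster and workload.

```python
def log_apply_hours(log_gb: float, rate_gb_per_hour: float = 30.0) -> float:
    """Estimate the duration of the log-apply stage in hours.

    The default rate is the 30 GB/h figure quoted in the text; treat it
    as a reference point rather than a guarantee.
    """
    if log_gb < 0:
        raise ValueError("log_gb must be non-negative")
    if rate_gb_per_hour <= 0:
        raise ValueError("rate_gb_per_hour must be positive")
    return log_gb / rate_gb_per_hour


# Applying 90 GB of backed-up change logs at the quoted rate.
estimate = log_apply_hours(90)
```

At the quoted rate, 90 GB of change logs would take about three hours to apply, which shows why taking full backups regularly keeps the log-apply portion of RTO short.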

For backup and recovery performance metrics, see TiDB Backup and Recovery Overview.

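Summary and future plans

PITR is an essential feature for database products because it protects against data loss. With PITR, TiDB users can recover data up to a specific point in time, which minimizes the amount of data lost and reduces downtime.

In the future, we plan to further optimize PITR recovery performance for large clusters, which will improve the stability and performance of this feature. We also plan to support backup and recovery through SQL statements, making TiDB's backup and recovery solution stable, reliable, and high-performing.

To try PITR in TiDB, use the Community Edition or TiDB Cloud (PITR is not yet supported on TiDB Serverless, which offers a free trial). We recommend the TiDB Quick Start Guide in the Japanese documentation or the TiDB Cloud Workshop Guide. If you have any questions, contact us through the inquiry form. You can also report issues on GitHub.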
