This post is a translation of "How to Choose the Right Database for Your NFT Marketplace," an English blog post published on October 20, 2022.

The NFT market is booming

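NFTs, or non-fungible tokens, are blockchain-based digital assets. Bitcoin and Ether, the world's two leading cryptocurrencies, are fungible: any unit can be exchanged for any other. An NFT, by contrast, represents unique ownership of a digital item such as a painting, a song, a video, or even a tweet. NFTs cannot be copied, substituted, or subdivided, but they can be held, transferred, and traded.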

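The NFT market has boomed in recent years. In 2021 alone, NFT trading volume exceeded $17 billion, a 21,000% increase over 2020. Tens of thousands of artists created and traded NFT artworks, and NFT marketplaces that provide platforms for NFT trading, such as OpenSea and Coinbase, have proliferated.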

The traditional data architecture for NFT marketplaces

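An NFT marketplace consists of multiple components, including digital wallets, smart contracts, databases, and blockchains. One of the most important is the database, which stores, processes, and analyzes large volumes of blockchain data. If you are new to Web3 or the NFT ecosystem and plan to build your own NFT platform, how should you design the data architecture, and which database should you choose?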

A traditional data architecture needs at least the following components:

- A blockchain subscriber

Blockchains such as Ethereum, Solana, Polygon, and BNB Chain generate a huge number of NFT transactions every second, so you need a blockchain subscriber to collect all of them.

- A message queue (MQ) system such as Kafka, plus an MQ reader

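A message queue system such as Kafka receives the NFT transaction data collected from the blockchains and channels it downstream. The MQ reader then reads messages from the queue asynchronously and bulk-inserts them into the analytical database.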

- An OLAP database

An online analytical processing (OLAP) database, such as Apache Hive combined with Apache Spark, analyzes NFT transactions and answers analytical queries.

- An OLTP database

An online transaction processing (OLTP) database such as MySQL stores the analysis results returned by the OLAP database and serves requests from the NFT platform's web server.

- A web server

You also need a web server to run the NFT platform.

Figure: The traditional data architecture for an NFT marketplace
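The MQ reader's role described above (read messages asynchronously, then bulk-insert them) can be sketched as a micro-batching loop. This is a minimal illustration under stated assumptions, not part of any specific product: Python's standard `queue.Queue` stands in for Kafka, and the table `nft_transfers`, its columns, and the batch parameters are hypothetical. The sketch only builds a multi-row `INSERT` with `%s` placeholders, the parameter style used by common Python MySQL drivers; executing it against a database is left out.

```python
import queue
from typing import Any

# Hypothetical target table and column order, for illustration only.
TABLE = "nft_transfers"
COLUMNS = ("chain", "contract", "token_id", "from_addr", "to_addr", "block_number")

Message = dict[str, Any]


def drain_batch(q: "queue.Queue[Message]", max_size: int, timeout: float) -> list[Message]:
    """Wait up to `timeout` seconds for one message, then take up to max_size - 1 more without waiting."""
    batch: list[Message] = []
    try:
        batch.append(q.get(timeout=timeout))
    except queue.Empty:
        return batch  # the queue stayed empty for the whole timeout
    while len(batch) < max_size:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            break
    return batch


def build_bulk_insert(batch: list[Message]) -> tuple[str, list[Any]]:
    """Build one multi-row INSERT with %s placeholders and its flattened parameter list."""
    row = "(" + ", ".join(["%s"] * len(COLUMNS)) + ")"
    sql = f"INSERT INTO {TABLE} ({', '.join(COLUMNS)}) VALUES " + ", ".join([row] * len(batch))
    params = [msg[col] for msg in batch for col in COLUMNS]
    return sql, params


if __name__ == "__main__":
    q: "queue.Queue[Message]" = queue.Queue()
    for i in range(5):
        q.put({"chain": "ethereum", "contract": "0xabc", "token_id": i,
               "from_addr": "0x0", "to_addr": "0x1", "block_number": 100 + i})
    while batch := drain_batch(q, max_size=2, timeout=0.1):
        sql, params = build_bulk_insert(batch)
        # A real reader would call cursor.execute(sql, params) and commit here.
        print(f"{len(batch)} rows, {len(params)} parameters")
```

Batching trades a little latency for throughput: a larger `max_size` means fewer round trips per row, while `timeout` bounds how long the reader blocks when the queue is empty.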

Pain points you will likely encounter

If you implement, run, and operate a traditional data system for your NFT marketplace, you will likely run into the following pain points:

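- Real-time NFT trading is barely possible. Every second, many NFT items are created and traded on each blockchain, and an NFT marketplace must be agile enough to keep up with the latest state of the market: the faster the platform reacts, the better informed its users' trades can be. However, the traditional architecture has a long data pipeline built on a complex technology stack, which undermines the platform's agility.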

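- The volume of NFT data stored on blockchains is huge and keeps growing. Storing every existing NFT transaction from the mainstream blockchains, together with its metadata, takes at least 3 TB of storage even after compression. Most data warehouses can hold that much data, but most OLTP databases struggle to.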

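- The technology stack is too complex. With a traditional architecture, you need a big data system (say, Apache Hive) to import historical NFT data from the blockchains into your marketplace's storage. You also need to build additional programs that subscribe to real-time transaction data from blockchains or NFT APIs, write it to the message queue, and then write it to the big data system in batches. And there is more: you need an OLTP database to store the analysis results from Apache Spark. This setup is not only costly but also hurts the platform's agility.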

Why TiDB?

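TiDB is an open-source, distributed, MySQL-compatible database that supports hybrid transactional and analytical processing (HTAP) workloads. It is also horizontally scalable, strongly consistent, and highly available.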

TiDB addresses these pain points because it can:

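- Handle both real-time writes and analytics. TiDB stores data in both row and columnar formats, and the data is strongly consistent. TiDB can analyze real-time data while accepting heavy write loads, so it can compute analytical information such as NFT rankings in real time.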

- Scale out easily. TiDB can scale out horizontally on demand to keep up with the rapidly growing volume of blockchain data.

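- Simplify the technology stack. TiDB replaces the Apache Kafka and Hive parts of the traditional data architecture: it accepts high-QPS writes and can store large volumes of data. It is also a complete replacement for MySQL, so you can query analysis results directly, in real time, and display them in your NFT marketplace. With TiDB, you can quickly develop and release a minimum viable product.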

- Support JSON. JSON is the most common format for NFT metadata. TiDB fully supports JSON, so you can fetch blockchain data and store it directly without predefining a metadata schema.
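To illustrate storing metadata without a predefined schema, the sketch below keeps each token's metadata document as-is in a single JSON column and only checks that it parses as a JSON object. The table `nft_metadata`, its columns, and the sample query are hypothetical; the `->>` operator is MySQL JSON syntax and is assumed here to work the same way in TiDB. The `attributes` list follows a common NFT metadata convention, but individual collections may omit it or shape it differently, which is why the lookup is defensive.

```python
import json
from typing import Any

# Hypothetical table: the whole metadata document lives in one JSON column,
# so no per-attribute columns need to be defined up front.
DDL = """CREATE TABLE nft_metadata (
    contract VARCHAR(42) NOT NULL,
    token_id VARCHAR(78) NOT NULL,  -- a uint256 has at most 78 decimal digits
    metadata JSON,
    PRIMARY KEY (contract, token_id)
)"""

# Hypothetical query that reads a field out of the JSON column (MySQL-style ->> operator).
FIND_BY_NAME = "SELECT contract, token_id FROM nft_metadata WHERE metadata->>'$.name' = %s"


def to_row(contract: str, token_id: int, raw_metadata: str) -> tuple[str, str, str]:
    """Check that fetched metadata is a JSON object and return a row for nft_metadata."""
    doc = json.loads(raw_metadata)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(doc, dict):
        raise ValueError("expected a JSON object")
    # Re-serialize compactly; the document's content stays as the collection defined it.
    return contract.lower(), str(token_id), json.dumps(doc, separators=(",", ":"))


def trait_value(raw_metadata: str, trait_type: str) -> Any:
    """Return one trait from the conventional 'attributes' list, or None if it is absent."""
    doc = json.loads(raw_metadata)
    attributes = doc.get("attributes", []) if isinstance(doc, dict) else []
    if not isinstance(attributes, list):
        return None
    for attr in attributes:
        if isinstance(attr, dict) and attr.get("trait_type") == trait_type:
            return attr.get("value")
    return None
```

Because the schema only fixes the key columns, a collection that adds new metadata fields later needs no `ALTER TABLE`; the trade-off is that field-level validation moves into application code.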

Figure: A new data architecture for NFT marketplaces using TiDB
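A real-time NFT ranking, as mentioned among TiDB's capabilities above, could be expressed as an ordinary aggregate query. The sketch below assumes a hypothetical `nft_trades(collection, price_eth, traded_at)` table; the SQL is kept as a string, and `rank_collections` is a pure-Python equivalent that shows what the query computes over in-memory rows. It is an illustration only, not the query used by any production marketplace.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Iterable

# Hypothetical ranking query over a hypothetical nft_trades table.
RANKING_SQL = """SELECT collection, SUM(price_eth) AS volume, COUNT(*) AS trades
FROM nft_trades
WHERE traded_at >= NOW() - INTERVAL 1 DAY
GROUP BY collection
ORDER BY volume DESC
LIMIT %s"""


def rank_collections(
    trades: Iterable[tuple[str, float, datetime]],
    now: datetime,
    window: timedelta = timedelta(days=1),
    limit: int = 10,
) -> list[tuple[str, float, int]]:
    """In-memory equivalent of RANKING_SQL: (collection, volume, trades), highest volume first."""
    volume: dict[str, float] = defaultdict(float)
    count: dict[str, int] = defaultdict(int)
    cutoff = now - window
    for collection, price_eth, traded_at in trades:
        if traded_at >= cutoff:  # same time filter as the WHERE clause
            volume[collection] += price_eth  # SUM(price_eth)
            count[collection] += 1  # COUNT(*)
    ranked = sorted(volume, key=lambda c: volume[c], reverse=True)  # ORDER BY volume DESC
    return [(c, volume[c], count[c]) for c in ranked[:limit]]  # LIMIT
```

In an HTAP database the point is that a query like `RANKING_SQL` can run against the same tables that are receiving new trades, instead of waiting for a nightly batch job to copy them into a warehouse.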

In addition to the on-premises version of TiDB, you can also try TiDB Cloud, a fully managed Database-as-a-Service (DBaaS) that brings all of TiDB's capabilities to the cloud. TiDB Cloud is now available on AWS and Google Cloud.

Figure: The TiDB Cloud architecture and how TiDB Cloud supports applications

How a leading NFT player uses TiDB

One TiDB adopter is a leading player in the NFT market. They track NFT trades across dozens of public chains and help NFT users discover investment opportunities with real-time NFT information.

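Previously, they stored all NFT transaction data in a data warehouse, ran batch analysis once a day, and wrote the results to an OLTP database. With that solution, data latency was about one day. Since migrating their data to TiDB, they have cut data latency from one day to a few seconds. Over the same period, their data volume has tripled to about 20 TB, and TiDB's elastic scalability lets it absorb this growth easily.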

Try the NFT demo now!

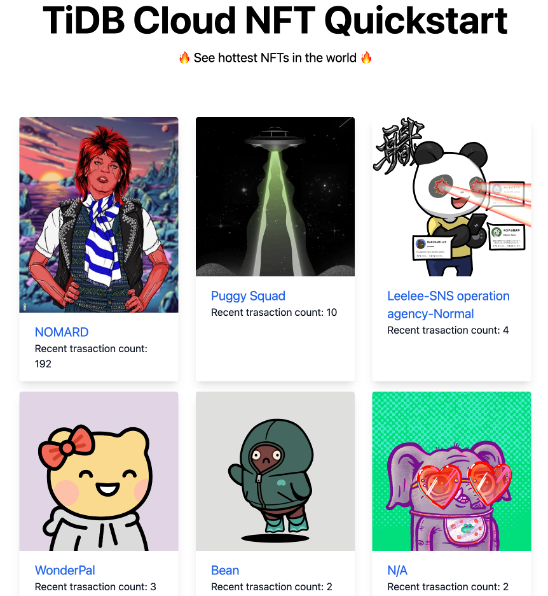

We built a simple NFT demo, TiDB Cloud NFT Quickstart, with JavaScript and TiDB Cloud. Before you start your own NFT product, you can try this demo to learn what TiDB Cloud can do and how it runs.

With this demo, you can:

- Scan historical NFT transactions on Ethereum and track real-time transactions.

- Browse the most popular items in the NFT market.
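Scanning Ethereum for NFT transactions, as the demo does, typically starts with decoding `Transfer` event logs. The sketch below is not taken from the demo's source code; it assumes log objects shaped like results of the Ethereum JSON-RPC `eth_getLogs` method (a `topics` list, hex-string `blockNumber`) and handles only ERC-721 transfers. ERC-721 and ERC-20 share the same `Transfer(address,address,uint256)` signature hash, but ERC-721 indexes the token ID, so its logs carry four topics; ERC-1155 uses different events and is out of scope here.

```python
from dataclasses import dataclass
from typing import Any, Optional

# keccak256("Transfer(address,address,uint256)"), shared by ERC-20 and ERC-721.
TRANSFER_TOPIC = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"
ZERO_ADDRESS = "0x" + "0" * 40


@dataclass(frozen=True)
class NftTransfer:
    contract: str
    from_addr: str
    to_addr: str
    token_id: int
    block_number: int
    tx_hash: str

    @property
    def is_mint(self) -> bool:
        # ERC-721 reports newly minted tokens as transfers from the zero address.
        return self.from_addr == ZERO_ADDRESS


def topic_to_address(topic: str) -> str:
    # Indexed addresses are left-padded to 32 bytes; keep the last 20 bytes (40 hex digits).
    return "0x" + topic[-40:].lower()


def decode_erc721_transfer(log: dict[str, Any]) -> Optional[NftTransfer]:
    """Decode one eth_getLogs-style log entry, or return None if it is not an ERC-721 Transfer."""
    topics = log["topics"]
    # ERC-721 indexes tokenId, giving 4 topics; an ERC-20 Transfer has only 3.
    if len(topics) != 4 or topics[0].lower() != TRANSFER_TOPIC:
        return None
    return NftTransfer(
        contract=log["address"].lower(),
        from_addr=topic_to_address(topics[1]),
        to_addr=topic_to_address(topics[2]),
        token_id=int(topics[3], 16),  # int() accepts the 0x prefix with base 16
        block_number=int(log["blockNumber"], 16),
        tx_hash=log["transactionHash"],
    )
```

A token ID is a uint256 and can exceed 64 bits, so when these records are written to a database, a wide decimal or string column is a safer choice than `BIGINT`.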

Figure: The NFT demo, TiDB Cloud NFT Quickstart

If you are interested in the demo's source code, you can find it on GitHub.

Keep in touch!

If you are interested in TiDB or TiDB Cloud, feel free to contact our sales team. You can also join our community on Slack or TiDB Internals to share your thoughts. To stay up to date, follow us on Twitter, LinkedIn, or GitHub.
