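Note: This post is a translation of the English blog post "A Comprehensive Guide to TiDB’s Backup and Recovery Technology," published on October 20, 2024.

Data can be lost while a database is in use for many reasons, including operator error, malicious attacks, and server hardware failure. Backup and recovery technology is the last line of defense: it makes sure the data can still be restored and used after such a loss.

As a native distributed database, TiDB fully supports a range of backup and recovery capabilities. Because of its distinctive architecture, however, the principles behind its backup and recovery differ from those of traditional databases.

This article gives an overview of TiDB's backup and recovery capabilities.

TiDB's Backup Capabilities

TiDB offers two kinds of backup: physical backup and logical backup.

Physical backup copies the physical files (with the .SST extension) directly and is divided into full backup and incremental backup. Logical backup exports data to binary or text files. Physical backup is typically used for cluster-level or database-level backups that involve large volumes of data, because it guarantees the consistency of the backed-up data.

Logical backup is used mainly for full backups of small datasets or a handful of tables, but it does not guarantee continuous data consistency while the cluster is in operation.

Physical Backup

Physical backup is divided into full backup and incremental backup. A full backup is also called a "snapshot backup" because it relies on a snapshot to ensure data consistency. An incremental backup is called a "log backup" in current TiDB versions and backs up the KV (key-value) change logs of the most recent period.

Snapshot Backup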

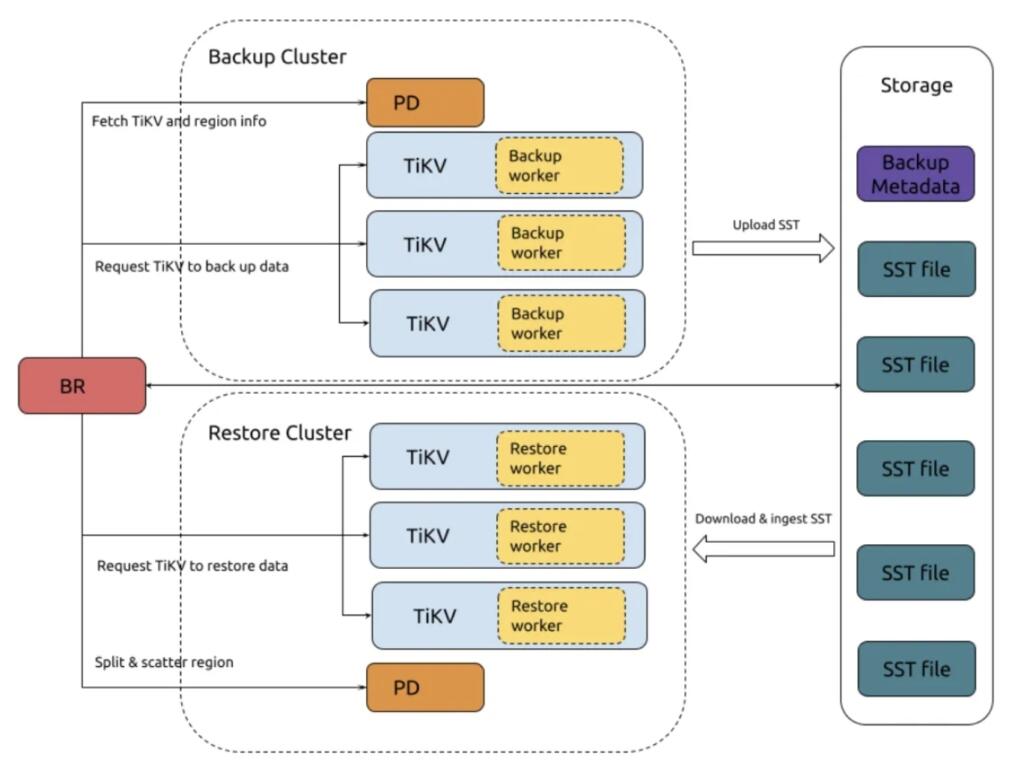

The complete snapshot backup process

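- BR receives the backup command:
  - BR receives the br backup full command and obtains the backup snapshot point and the backup storage address.
- BR schedules the backup:
  - Pauses GC so that the data being backed up is not reclaimed by garbage collection (GC).
  - Accesses PD to obtain the distribution of the Regions to back up and the TiKV node information.
  - Creates backup requests and sends them to the TiKV nodes. Each request contains the backup ts, the Regions to back up, and the backup storage address.
- TiKV accepts the backup request and initializes a backup worker:
  - Each TiKV node receives the backup request and initializes a backup worker.
- TiKV backs up the data:
  - Read the data: the backup worker reads the data corresponding to the backup ts from the Region leader.
  - Save to SST files: the data is held in memory as SST files.
  - Upload the SST files to the backup storage.
- BR collects the backup results from each TiKV:
  - BR gathers the backup results from every TiKV node. If a Region has changed, BR retries the operation. If a retry is not possible, the backup fails.
- BR backs up the metadata:
  - BR backs up the table schemas, calculates the checksum of the table data, generates the backup metadata, and uploads it to the backup storage.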
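The scheduling and retry behaviour described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: BackupRequest, run_snapshot_backup, and the send callback are hypothetical names, one request per Region stands in for the requests BR sends to TiKV nodes, and the retry limit is arbitrary. It is not BR's actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class BackupRequest:
    """Hypothetical request shape: the three fields the post says a request carries."""
    backup_ts: int   # snapshot timestamp every node must read at
    region_id: int   # Region covered by this request
    storage: str     # backup storage address


def run_snapshot_backup(
    regions: List[int],
    backup_ts: int,
    storage: str,
    send: Callable[[BackupRequest], bool],
    max_retries: int = 3,
) -> Dict[int, int]:
    """Send one request per Region and retry failures.

    Returns the number of attempts each Region needed. Raises RuntimeError
    when a Region still fails after max_retries attempts, which mirrors
    "if a retry is not possible, the backup fails".
    """
    attempts: Dict[int, int] = {}
    for region_id in regions:
        request = BackupRequest(backup_ts, region_id, storage)
        for attempt in range(1, max_retries + 1):
            attempts[region_id] = attempt
            if send(request):
                break  # this Region is backed up, move on to the next one
        else:
            # The loop ended without a successful send: give up on the backup.
            raise RuntimeError(f"backup failed for region {region_id}")
    return attempts
```

Passing a fake send function that fails once for a single Region is enough to see the retry path without a cluster.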

The recommended way to take a snapshot backup is the br command-line tool that TiDB provides. You can install it with tiup install br, after which the related commands are available. Snapshot backup currently supports cluster-level, database-level, and table-level backups. The following example uses br to take a snapshot backup of a cluster.

[tidb@tidb53 ~]$ tiup br backup full --pd "172.20.12.52:2679" --storage "local:///data1/backups" --ratelimit 128 --log-file backupfull.log

tiup is checking updates for component br ...

Starting component `br`: /home/tidb/.tiup/components/br/v7.6.0/br backup full --pd 172.20.12.52:2679 --storage local:///data1/backups --ratelimit 128 --log-file backupfull.log

Detail BR log in backupfull.log

[2024/03/05 10:19:27.437 +08:00] [WARN] [backup.go:311] ["setting `--ratelimit` and `--concurrency` at the same time, ignoring `--concurrency`: `--ratelimit` forces sequential (i.e. concurrency = 1) backup"] [ratelimit=134.2MB/s] [concurrency-specified=4]

Full Backup <----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------> 100.00%

Checksum <-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------> 100.00%

[2024/03/05 10:20:29.456 +08:00] [INFO] [collector.go:77] ["Full Backup success summary"] [total-ranges=207] [ranges-succeed=207] [ranges-failed=0] [backup-checksum=1.422780807s] [backup-fast-checksum=17.004817ms] [backup-total-ranges=161] [total-take=1m2.023929601s] [BackupTS=448162737288380420] [total-kv=25879266] [total-kv-size=3.587GB] [average-speed=57.82MB/s] [backup-data-size(after-compressed)=1.868GB] [Size=1867508767]

[tidb@tidb53 ~]$ ll /data1/backups/

总用量 468

drwxr-xr-x. 2 nfsnobody nfsnobody 20480 3月 5 10:20 1

drwxr-xr-x. 2 tidb tidb 12288 3月 5 10:20 4

drwxr-xr-x. 2 nfsnobody nfsnobody 12288 3月 5 10:20 5

-rw-r--r--. 1 nfsnobody nfsnobody 78 3月 5 10:19 backup.lock

-rw-r--r--. 1 nfsnobody nfsnobody 395 3月 5 10:20 backupmeta

-rw-r--r--. 1 nfsnobody nfsnobody 50848 3月 5 10:20 backupmeta.datafile.000000001

-rw-r--r--. 1 nfsnobody nfsnobody 365393 3月 5 10:20 backupmeta.schema.000000002

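drwxrwxrwx. 3 nfsnobody nfsnobody 4096 3月 5 10:19 checkpoints

The --ratelimit parameter sets the maximum speed at which each TiKV node may run the backup task, in MiB per second. The --log-file parameter specifies the file to which the backup log is written. The --pd parameter specifies the PD node. In addition, the br command supports a --backupts parameter that gives the physical point in time of the backup snapshot. If this parameter is omitted, the current time is used as the snapshot point.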
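One detail in the session above is easy to misread: the command passes --ratelimit 128, while the warning line reports ratelimit=134.2MB/s. The small sketch below shows that the two figures agree if the log prints the limit in decimal megabytes. That reading of the log format is an assumption based on the numbers, and the helper names are hypothetical.

```python
MIB = 1024 * 1024  # bytes in one mebibyte


def ratelimit_to_bytes_per_sec(ratelimit_mib: int) -> int:
    """Convert a --ratelimit value (MiB per second) to bytes per second."""
    return ratelimit_mib * MIB


def format_decimal_mb(bytes_per_sec: int) -> str:
    """Render a byte rate in decimal megabytes (1 MB = 1,000,000 bytes)."""
    return f"{bytes_per_sec / 1_000_000:.1f}MB/s"


print(format_decimal_mb(ratelimit_to_bytes_per_sec(128)))  # prints 134.2MB/s
```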

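If you want to find out the point in time at which a completed snapshot backup was taken, br provides the br validate decode command, which reads that information from the backup set. The command outputs a TSO (Timestamp Oracle) value, which you can convert to physical time with tidb_parse_tso, as in the following session.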
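As a rough sketch of what that conversion involves: a TiDB TSO packs a physical timestamp in milliseconds into its high bits and an 18-bit logical counter into its low bits. The Python below decodes the TSO used in this post. The function names are hypothetical, and the +08:00 offset is taken from the timestamps in the console output.

```python
from datetime import datetime, timedelta, timezone
from typing import Tuple

LOGICAL_BITS = 18  # the low 18 bits of a TSO hold the logical counter
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def decode_tso(tso: int) -> Tuple[int, int]:
    """Split a TSO into (physical milliseconds since the Unix epoch, logical counter)."""
    physical_ms = tso >> LOGICAL_BITS
    logical = tso & ((1 << LOGICAL_BITS) - 1)
    return physical_ms, logical


def tso_to_datetime(tso: int, utc_offset_hours: int = 0) -> datetime:
    """Return the physical part of a TSO as an aware datetime in the given UTC offset."""
    physical_ms, _ = decode_tso(tso)
    moment = EPOCH + timedelta(milliseconds=physical_ms)
    return moment.astimezone(timezone(timedelta(hours=utc_offset_hours)))


print(decode_tso(448162737288380420))          # (1709605168489, 4)
print(tso_to_datetime(448162737288380420, 8))  # 2024-03-05 10:19:28.489000+08:00
```

The physical part matches the value that tidb_parse_tso returns in the session that follows.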

[tidb@tidb53 ~]$ tiup br validate decode --field="end-version" --storage "local:///data1/backups" | tail -n1

tiup is checking updates for component br ...

Starting component `br`: /home/tidb/.tiup/components/br/v7.6.0/br validate decode --field=end-version --storage local:///data1/backups

Detail BR log in /tmp/br.log.2024-03-05T10.24.25+0800

448162737288380420

mysql> select tidb_parse_tso(448162737288380420);

+------------------------------------+

| tidb_parse_tso(448162737288380420) |

+------------------------------------+

| 2024-03-05 10:19:28.489000 |

+------------------------------------+

1 row in set (0.01 sec)

Log Backup

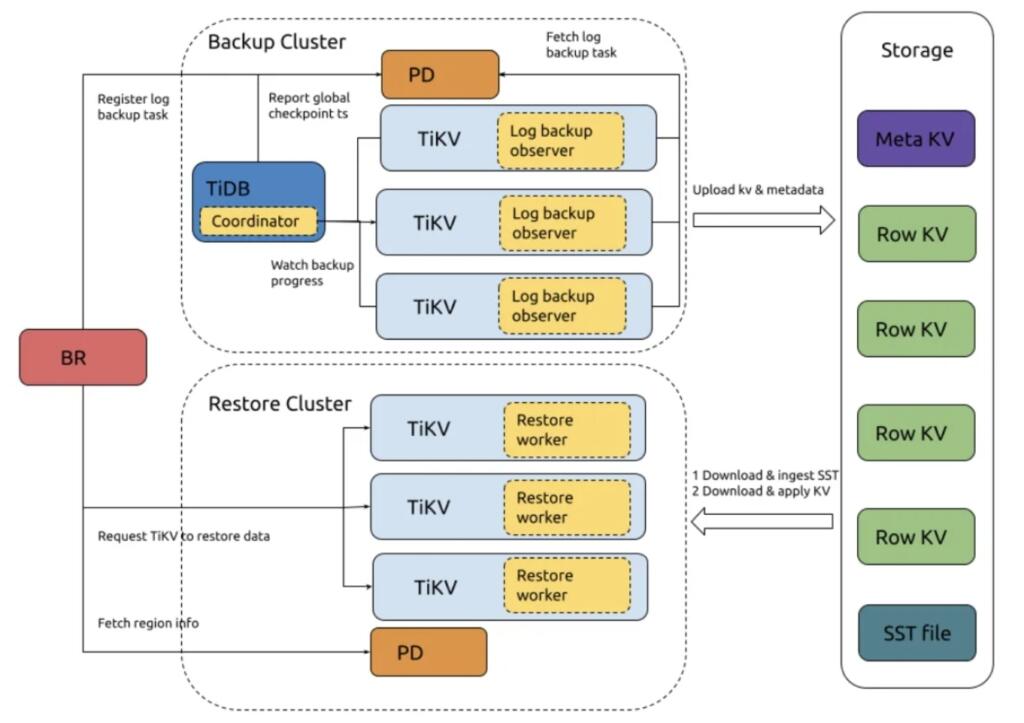

The complete log backup process

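- BR receives the backup command:
  - BR receives the br log start command, parses it to obtain the checkpoint timestamp and the backup storage address of the log backup task, and registers the task in PD.
- TiKV watches for the creation and update of log backup tasks:
  - The log backup observer on each TiKV node watches PD for log backup tasks being created or updated, and backs up the data on that node that falls within the backup range.
- The TiKV log backup observer continuously backs up the KV change logs:
  - Reads the KV data changes and saves them to backup files in a custom format.
  - Periodically queries PD for the global checkpoint timestamp.
  - Periodically generates local metadata.
  - Periodically uploads the log backup data and the local metadata to the backup storage.
  - Asks PD to prevent data that has not yet been backed up from being garbage collected.
- The TiDB coordinator monitors the progress of the log backup:
  - It polls all TiKV nodes to obtain the backup progress of each Region. Based on the Region checkpoint timestamps, it calculates the overall progress of the log backup task and uploads it to PD.
- PD stores the status of the log backup task:
  - The status of the log backup task can be queried with br log status.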
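To make the coordinator step more concrete, here is a minimal sketch of how an overall checkpoint could be derived from per-Region checkpoints. It assumes that the overall progress is the minimum of the Region checkpoint timestamps, since only data older than the slowest Region's checkpoint is known to be backed up everywhere. The function name and the sample values are hypothetical.

```python
from typing import Dict


def global_checkpoint(region_checkpoints: Dict[int, int]) -> int:
    """Return the timestamp up to which every Region has been backed up.

    Assumption: the slowest Region bounds the whole task, so the global
    checkpoint is the minimum of the per-Region checkpoints.
    """
    if not region_checkpoints:
        raise ValueError("no Region checkpoints reported yet")
    return min(region_checkpoints.values())


# Region 2 lags behind, so it determines the overall progress.
print(global_checkpoint({1: 105, 2: 100, 3: 110}))  # prints 100
```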

How to take a log backup:

Snapshot backup commands start with br backup ..., and log backup commands start with br log .... To start a log backup, use the br log start command. Once the log backup task has started, use br log status to check its status.

In the br log start command, the --task-name parameter specifies the name of the log backup task, the --pd parameter specifies the PD node, and the --storage parameter specifies the log backup storage address. The command also supports a --start-ts parameter that specifies the start time of the log backup. If it is omitted, the current time is used as start-ts.

[tidb@tidb53 ~]$ tiup br log status --task-name=pitr --pd "172.20.12.52:2679"

tiup is checking updates for component br ...

Starting component `br`: /home/tidb/.tiup/components/br/v7.6.0/br log status --task-name=pitr --pd 172.20.12.52:2679

Detail BR log in /tmp/br.log.2024-03-05T10.56.28+0800

● Total 1 Tasks.

> #1 <

name: pitr

status: ● NORMAL

start: 2024-03-05 10:50:52.939 +0800

end: 2090-11-18 22:07:45.624 +0800

storage: local:///data1/backups/pitr

speed(est.): 0.00 ops/s

checkpoint[global]: 2024-03-05 10:55:42.69 +0800; gap=47s

[tidb@tidb53 ~]$ tiup br log status --task-name=pitr --pd "172.20.12.52:2679"

tiup is checking updates for component br ...

Starting component `br`: /home/tidb/.tiup/components/br/v7.6.0/br log status --task-name=pitr --pd 172.20.12.52:2679

Detail BR log in /tmp/br.log.2024-03-05T10.58.57+0800

● Total 1 Tasks.

> #1 <

name: pitr

status: ● NORMAL

start: 2024-03-05 10:50:52.939 +0800

end: 2090-11-18 22:07:45.624 +0800

storage: local:///data1/backups/pitr

speed(est.): 0.00 ops/s

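checkpoint[global]: 2024-03-05 10:58:07.74 +0800; gap=51s

The output above shows that the log backup status is normal. Comparing the output of several runs shows that the log backup task runs periodically in the background. checkpoint[global] means that all data in the cluster from before this checkpoint time has been saved to the backup storage. It is therefore the latest point in time to which the backup data can be restored.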
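If you want to watch the checkpoint from a script, the status line can be parsed directly. The sketch below assumes the exact line format shown in the output above, with the gap given in whole seconds. Other gap formats are not handled, and the function names and the threshold are hypothetical.

```python
import re
from datetime import datetime
from typing import Tuple

# Matches lines such as:
#   checkpoint[global]: 2024-03-05 10:55:42.69 +0800; gap=47s
_CHECKPOINT_RE = re.compile(
    r"checkpoint\[global\]:\s*"
    r"(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}(?:\.\d+)?) "
    r"(?P<tz>[+-]\d{4}); gap=(?P<gap>\d+)s"
)


def parse_checkpoint_line(line: str) -> Tuple[datetime, int]:
    """Return (checkpoint time, gap in seconds) from a br log status line."""
    match = _CHECKPOINT_RE.search(line)
    if match is None:
        raise ValueError(f"not a checkpoint line: {line!r}")
    ts_text = match.group("ts")
    # Fractional seconds are optional in the output, so pick the format to match.
    fmt = "%Y-%m-%d %H:%M:%S.%f %z" if "." in ts_text else "%Y-%m-%d %H:%M:%S %z"
    checkpoint = datetime.strptime(f"{ts_text} {match.group('tz')}", fmt)
    return checkpoint, int(match.group("gap"))


def gap_exceeds(line: str, threshold_seconds: int) -> bool:
    """True when the backup lags behind by more than the given number of seconds."""
    _, gap = parse_checkpoint_line(line)
    return gap > threshold_seconds


line = "checkpoint[global]: 2024-03-05 10:55:42.69 +0800; gap=47s"
print(parse_checkpoint_line(line)[1])  # prints 47
print(gap_exceeds(line, 300))          # prints False
```

A check like gap_exceeds could feed an alert when the checkpoint stops advancing, which would mean the latest restorable time is falling behind.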

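Logical Backup

A logical backup extracts data using TiDB SQL statements or export tools. Besides the usual export methods, TiDB provides a tool called Dumpling, which exports data stored in TiDB or MySQL in SQL or CSV format. For details, see the Dumpling documentation on exporting data with Dumpling. A typical use of Dumpling looks like the following, which exports all non-system table data from the specified database as SQL files. The example uses 8 concurrent threads for the export, sets the output directory to /tmp/test, and limits each file to 256 MiB. It also enables concurrency within a single table, splitting the export every 200,000 records to speed it up.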

dumpling -u root -P 4000 -h 127.0.0.1 --filetype sql -t 8 -o /tmp/test -r 200000 -F256MiB

TiDB's Recovery Capabilities

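TiDB recovery falls into two categories: recovery based on physical backups and recovery based on logical backups. Recovery based on a physical backup restores data with the br restore command line and is generally used for large-scale, full data restores. Recovery based on a logical backup imports data, such as files exported by Dumpling, into the cluster and is generally used for small datasets or a few tables.

Physical Recovery

Physical recovery comes in two forms: snapshot backup recovery and point-in-time recovery (PITR). Snapshot backup recovery only needs the path of the backup storage. PITR needs the backup storage paths that hold the snapshot and log backup data, plus the point in time to recover to.

Snapshot Backup Recovery

The complete snapshot recovery process is as follows (it is already included in the snapshot backup example above):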

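- BR receives the restore command:
  - BR receives the br restore command, obtains the snapshot backup storage address and the objects to restore, and checks that those objects exist and meet the requirements.
- BR schedules the data restore:
  - Asks PD to disable automatic Region scheduling.
  - Reads the schema from the backup data and restores it.
  - Based on the backup data information, asks PD to allocate Regions and distributes them to TiKV.
  - Sends restore requests to TiKV according to the Regions that PD allocated.
- TiKV accepts the restore request and initializes a restore worker:
  - Each TiKV node receives the restore request and initializes a restore worker.
- TiKV restores the data:
  - Downloads the data from the backup storage to the local machine.
  - The restore worker rewrites the backup KV data, replacing the table IDs and index IDs.
  - Ingests the processed SST files into RocksDB.
  - Returns the restore result to BR.
- BR collects the restore results from each TiKV.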
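The rewrite step exists because the restored tables generally receive new IDs in the target cluster, so the table ID embedded in every key has to be replaced. The sketch below illustrates the idea on TiDB's documented row-key layout, t{tableID}_r{rowID}, with IDs stored as sign-flipped big-endian 64-bit integers. It is a simplified illustration only: the function names and the ID mapping are hypothetical, index IDs are not handled, and the real restore worker operates on TiKV's encoded keys.

```python
import struct
from typing import Dict

SIGN_MASK = 0x8000000000000000
TABLE_PREFIX = b"t"
ROW_SEPARATOR = b"_r"


def encode_int(value: int) -> bytes:
    """Encode a signed 64-bit integer so that byte order equals numeric order."""
    return struct.pack(">Q", (value & 0xFFFFFFFFFFFFFFFF) ^ SIGN_MASK)


def decode_int(raw: bytes) -> int:
    """Inverse of encode_int."""
    (unsigned,) = struct.unpack(">Q", raw)
    unsigned ^= SIGN_MASK
    return unsigned - (1 << 64) if unsigned >= (1 << 63) else unsigned


def row_key(table_id: int, row_id: int) -> bytes:
    """Build a row key in the t{tableID}_r{rowID} layout."""
    return TABLE_PREFIX + encode_int(table_id) + ROW_SEPARATOR + encode_int(row_id)


def rewrite_table_id(key: bytes, id_map: Dict[int, int]) -> bytes:
    """Replace the table ID in a key, keeping the rest of the key unchanged."""
    if not key.startswith(TABLE_PREFIX) or len(key) < 9:
        raise ValueError("not a table key")
    old_id = decode_int(key[1:9])
    return TABLE_PREFIX + encode_int(id_map[old_id]) + key[9:]


# Table 45 in the backup becomes table 102 in the restored cluster.
old_key = row_key(45, 1)
new_key = rewrite_table_id(old_key, {45: 102})
print(new_key == row_key(102, 1))  # prints True
```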

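How to restore a snapshot backup

A snapshot backup can be restored at the cluster, database, or table level. We recommend restoring into an empty cluster. If an object to be restored already exists in the cluster, the restore fails with an error (system tables are the exception). The following is an example of a cluster-level restore: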

[tidb@tidb53 ~]$ tiup br restore full --pd "172.20.12.52:2679" --storage "local:///data1/backups"

tiup is checking updates for component br ...

Starting component `br`: /home/tidb/.tiup/components/br/v7.6.0/br restore full --pd 172.20.12.52:2679 --storage local:///data1/backups

Detail BR log in /tmp/br.log.2024-03-05T13.08.08+0800

Full Restore <---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------> 100.00%

[2024/03/05 13:08:27.918 +08:00] [INFO] [collector.go:77] ["Full Restore success summary"] [total-ranges=197] [ranges-succeed=197] [ranges-failed=0] [split-region=786.659µs] [restore-ranges=160] [total-take=19.347776543s] [RestoreTS=448165390238351361] [total-kv=25811349] [total-kv-size=3.561GB] [average-speed=184MB/s] [restore-data-size(after-compressed)=1.847GB] [Size=1846609490] [BackupTS=448162737288380420]

To restore a single database, add the --db parameter to the restore command. To restore a single table, add both the --db and --table parameters to the restore command.

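PITR (Point-in-Time Recovery)

The PITR command is br restore point .... To initialize the restored cluster, use the --full-backup-storage parameter to specify the snapshot backup by giving its storage address. The --restored-ts parameter specifies the point in time to restore to. If it is omitted, the restore goes to the latest recoverable point in time. If you want to restore only log backup data, you must also use the --start-ts parameter to specify where the log backup restore starts.

The following is an example of a point-in-time recovery that includes a snapshot restore: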
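When choosing a --restored-ts value, it can help to see how a wall-clock time maps to the TSO that appears in the restore logs. The sketch below assumes the standard TSO layout (physical milliseconds shifted left by 18 bits, plus a logical counter). The function name is hypothetical. For the timestamp used in this post's example, the result equals the restore-to value that br reports in its log summary.

```python
from datetime import datetime, timedelta, timezone

LOGICAL_BITS = 18  # the low 18 bits of a TSO hold the logical counter
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def datetime_to_tso(moment: datetime, logical: int = 0) -> int:
    """Build a TSO from a timezone-aware datetime and an optional logical counter."""
    if moment.tzinfo is None:
        raise ValueError("a timezone-aware datetime is required")
    # Integer division keeps the arithmetic exact (no floating-point rounding).
    physical_ms = (moment - EPOCH) // timedelta(milliseconds=1)
    return (physical_ms << LOGICAL_BITS) | logical


restored = datetime.strptime("2024-03-05 13:38:28+0800", "%Y-%m-%d %H:%M:%S%z")
print(datetime_to_tso(restored))  # prints 448165867159552000
```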

[tidb@tidb53 ~]$ tiup br restore point --pd "172.20.12.52:2679" --full-backup-storage "local:///data1/backups/fullbk" --storage "local:///data1/backups/pitr" --restored-ts "2024-03-05 13:38:28+0800"

tiup is checking updates for component br ...

Starting component `br`: /home/tidb/.tiup/components/br/v7.6.0/br restore point --pd 172.20.12.52:2679 --full-backup-storage local:///data1/backups/fullbk --storage local:///data1/backups/pitr --restored-ts 2024-03-05 13:38:28+0800

Detail BR log in /tmp/br.log.2024-03-05T13.45.02+0800

Full Restore <---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------> 100.00%

[2024/03/05 13:45:24.620 +08:00] [INFO] [collector.go:77] ["Full Restore success summary"] [total-ranges=111] [ranges-succeed=111] [ranges-failed=0] [split-region=644.837µs] [restore-ranges=75] [total-take=21.653726346s] [BackupTS=448165866711285765] [RestoreTS=448165971332694017] [total-kv=25811349] [total-kv-size=3.561GB] [average-speed=164.4MB/s] [restore-data-size(after-compressed)=1.846GB] [Size=1846489912]

Restore Meta Files <......................................................................................................................................................................................> 100%

Restore KV Files <........................................................................................................................................................................................> 100%

[2024/03/05 13:45:26.944 +08:00] [INFO] [collector.go:77] ["restore log success summary"] [total-take=2.323796546s] [restore-from=448165866711285765] [restore-to=448165867159552000] [restore-from="2024-03-05 13:38:26.29 +0800"] [restore-to="2024-03-05 13:38:28 +0800"] [total-kv-count=0] [skipped-kv-count-by-checkpoint=0] [total-size=0B] [skipped-size-by-checkpoint=0B] [average-speed=0B/s]

Logical Recovery

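Logical recovery can also be thought of as data import. In addition to ordinary SQL imports, TiDB supports importing data with the Lightning tool. Lightning imports data from static files into a TiDB cluster and is used mainly for initial data loads.

For details, see the TiDB Lightning overview in the official documentation.

Summary

This post has summarized TiDB's backup and restore capabilities and their basic usage. TiDB's backup and restore mechanisms keep data safe and consistent, and their flexible strategies and tool support cover data protection at many scales and in many scenarios. For more detailed technical information and operation guides, see the official PingCAP documentation.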
